
AI is evolving fast — faster than many realize. For those paying attention, it’s obvious that we’re in the midst of exponential change. But what does exponential really mean when applied to artificial intelligence?

To help visualize this, we highly recommend checking out ai-2027.com, a remarkable site that quantifies and animates the trajectory of AI development. The interactive timeline is both fascinating and sobering: it projects professional-level coding by January 2026 and superhuman coding by February 2027.

More broadly, ai-2027.com forecasts that we are approaching the dawn of Artificial General Intelligence (AGI) and looks ahead to Artificial Superintelligence (ASI). The authors present this forecast as a narrative centered on alignment: whether a future shaped by AI will remain aligned with human interests.

The story envisions a future in which frontier AI does complex work but is not trusted, so less powerful, more trustworthy earlier-generation AI is used to verify that work.

A central question is whether there is a cost asymmetry in favor of verification: can less capable AI reliably check the work of more capable AI? In the story, alignment hinges on verifying good intent, and the authors describe two possible endings: one where the answer is "yes," and another where verification fails, with severe consequences for humanity.

At Nexus, we believe verifiability is a critical defensive technology that must be developed and deployed, which is why we launched the Nexus Verifiable AI Lab. We need to accelerate both our own work and industry collaboration so that verifiable systems can counterbalance the exponential impact of AI.

A central principle underpinning our work at Nexus is that verifying work can be much easier than doing it. Consider a crossword puzzle: it might be difficult to solve, but once a solution is proposed, checking it is straightforward. This cost asymmetry is leveraged in many domains: multiple-choice exams, tax audits, and ticket inspections all rely on verification being cheap enough to deter cheating.
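The crossword intuition has a familiar computational counterpart in integer factoring. The Python sketch below is our own illustration, not Nexus code: finding a factor by naive trial division takes roughly a million divisions here, while checking a proposed factorization takes a single multiplication. Trial division is deliberately naive and much faster factoring algorithms exist, but no known classical method finds the factors of large numbers anywhere near as cheaply as a multiplication checks them.

```python
import math


def find_factor(n: int) -> int:
    """Find the smallest nontrivial factor by trial division (about sqrt(n) steps)."""
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return d
    raise ValueError(f"{n} has no nontrivial factor")


def verify_factors(n: int, p: int, q: int) -> bool:
    """Check a proposed factorization with one multiplication and two range checks."""
    return 1 < p < n and 1 < q < n and p * q == n


# Product of two primes: the largest below one million and the smallest above it.
n = 999_983 * 1_000_003

p = find_factor(n)                     # expensive: roughly a million trial divisions
q = n // p
print(p, q, verify_factors(n, p, q))   # cheap: a single multiplication
```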

In the realm of software, decades of scientific research on verifiable computation, including our own team’s work, show that any software execution can, in principle, be verified far more cheaply than re-running it.

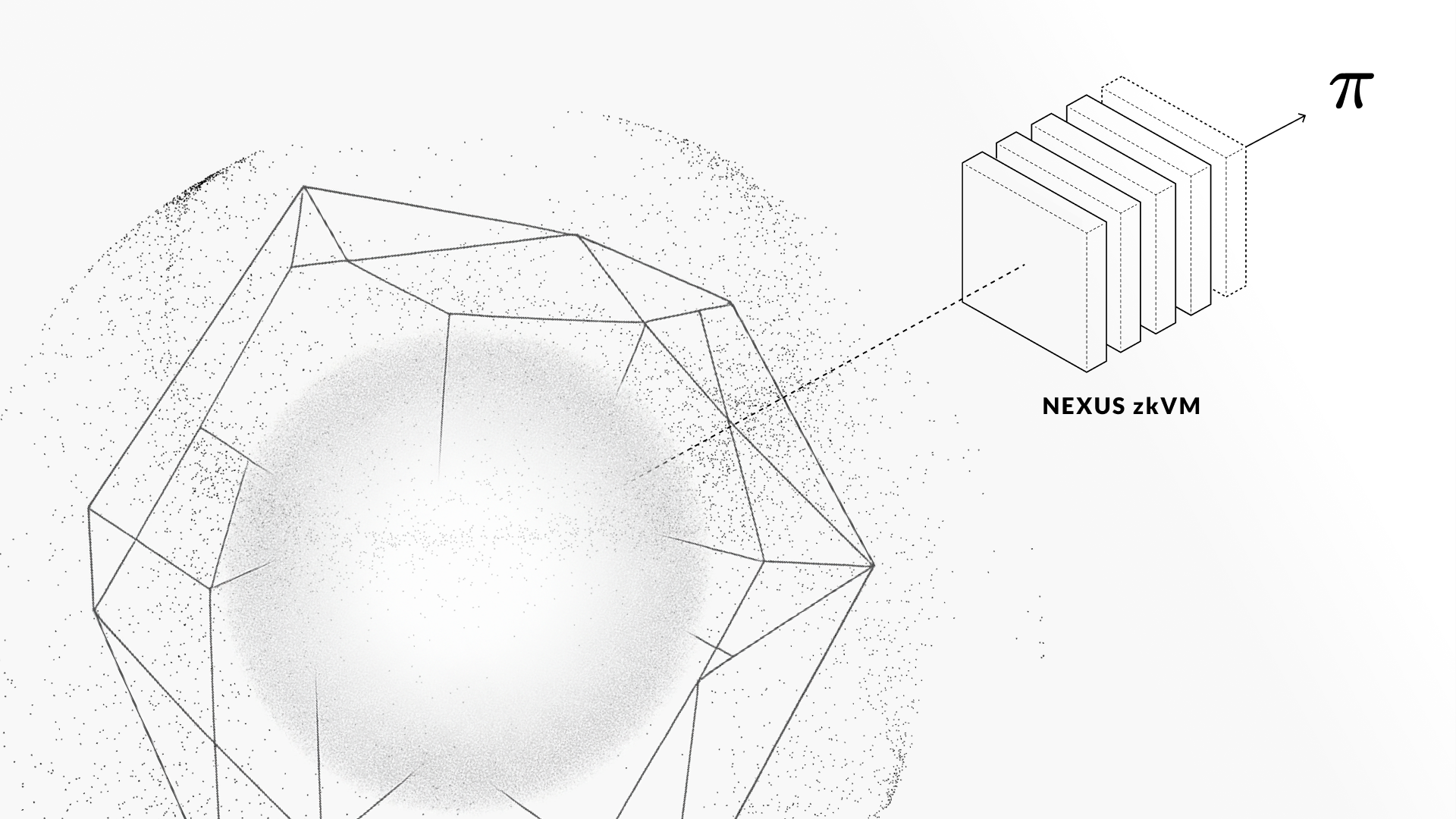

Here’s how it works: suppose a supercomputer performs a complex computation and produces an output. How do we know the result is correct? With our zkVM (zero-knowledge virtual machine), the output comes with a proof that it was computed correctly according to the specified program. Verifying that proof can take milliseconds, even when the underlying computation ran for hours or days. The catch is that generating the proof takes extra work. But once the proof exists, anyone can check it quickly, and a proof for an incorrect output is accepted only with negligible probability.
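A zkVM proof system is far more involved than anything that fits here, but the underlying asymmetry shows up in a classic, much simpler technique: Freivalds’ algorithm, which checks a claimed matrix product with O(n²) work per round instead of recomputing it in O(n³). The Python sketch below is our own illustration and is not part of the Nexus zkVM. Unlike a zkVM proof, it is neither zero-knowledge nor non-interactive, and the verifier needs the full inputs.

```python
import random

Matrix = list[list[int]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive O(n^3) product: stands in for the expensive computation."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(a: Matrix, v: list[int]) -> list[int]:
    """O(n^2) matrix-vector product."""
    return [sum(x * y for x, y in zip(row, v)) for row in a]


def freivalds_check(a: Matrix, b: Matrix, c: Matrix, rounds: int = 20) -> bool:
    """Probabilistically check that a @ b == c without recomputing the product.

    Each round draws a random 0/1 vector r and compares a(br) with cr. If c is
    wrong, one round detects it with probability at least 1/2, so a wrong c
    survives all rounds with probability at most 2**-rounds.
    """
    cols = len(c[0])
    for _ in range(rounds):
        r = [random.randint(0, 1) for _ in range(cols)]
        if matvec(a, matvec(b, r)) != matvec(c, r):
            return False
    return True


random.seed(0)
size = 60
a = [[random.randint(-9, 9) for _ in range(size)] for _ in range(size)]
b = [[random.randint(-9, 9) for _ in range(size)] for _ in range(size)]
c = matmul(a, b)                      # honest, expensive result
bad = [row[:] for row in c]
bad[3][7] += 1                        # tamper with a single entry
print(freivalds_check(a, b, c))       # True
print(freivalds_check(a, b, bad))     # False, except with probability at most 2**-20
```

Each round costs O(n²), so even many rounds stay much cheaper than recomputation. Roughly speaking, a zkVM aims to provide this kind of asymmetry for arbitrary programs rather than one specific operation.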

We are starting to see verifiable computing used to demonstrate that data has been processed correctly. A prominent example in the blockchain space is zero-knowledge (validity) rollups: instead of running a costly computation directly on a blockchain, you compute the result off-chain and submit it to the chain together with a cheap-to-verify proof of correctness.

Not every problem presents itself in a form primed for verifiable computation. Consider harder-to-verify scenarios: a manager evaluating employee performance, or a citizen judging political leadership. These are messy, ambiguous, and full of competing narratives. As AI evolves, such scenarios will only grow in importance: AI will take part in analysis and decision-making, AI will generate and curate the data we rely on, and in some cases AI will be used to confuse rather than illuminate, for example by fabricating data.

This is where we believe our lab’s mission truly begins. The Nexus Verifiable AI Lab exists to confront this messy reality.

As AI becomes deeply embedded in society—writing code, generating media, making decisions—we must develop ways to ensure trust, accountability, and provenance. In his thought-provoking blog post on techno-optimism, Vitalik Buterin makes the case that in times of change the focus should be on developing defensive technology first. An example of a defensive technology in the context of fake news and misinformation is the C2PA standard developed by the Coalition for Content Provenance and Authenticity, which we covered in our blog post on verifiable media.

The Nexus Verifiable AI Lab will take on challenges such as:

Verifiable software

The rise of AI copilots in software development — from code completion to full-stack implementation — is rapidly reducing the role of human oversight in production pipelines. While these systems boost productivity, they also introduce a new kind of opacity: Who wrote this function? What assumptions were embedded in the code? Can we trust the output if no human ever reviewed it?

The Nexus Verifiable AI Lab will focus on building verifiable software pipelines, where every contribution — human or machine — can be audited, attributed, and tested against explicit guarantees. By integrating cryptographic proofs, formal verification methods, and transparent logging into the development process, we’re working toward a future where even autonomous code generation is anchored in verifiable truth.
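One ingredient of such a pipeline, transparent logging, can be sketched as a hash-chained, append-only record of contributions. In this minimal Python sketch, the `ContributionLog` class, its fields, and the model name `copilot-model-x` are hypothetical illustrations, not a Nexus API. Each entry records who contributed, whether the author was human or AI, and a SHA-256 fingerprint of the code, and it commits to the previous entry’s hash so that editing history breaks the chain.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ContributionLog:
    """Hypothetical append-only log; each entry commits to the previous entry's hash."""
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical entries always hash identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, author: str, kind: str, code: str) -> str:
        body = {
            "author": author,  # a human handle or a model identifier
            "kind": kind,      # "human" or "ai" in this sketch
            "code_sha256": hashlib.sha256(code.encode()).hexdigest(),
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash and check each entry links to its predecessor."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = ContributionLog()
log.append("alice", "human", "def add(a, b): return a + b")
log.append("copilot-model-x", "ai", "def sub(a, b): return a - b")
print(log.verify())                    # True
log.entries[0]["author"] = "mallory"   # attempt to rewrite attribution
print(log.verify())                    # False
```

A hash chain only makes tampering evident relative to a trusted copy of the latest hash. A production pipeline would also sign entries and publish or anchor that hash externally, since anyone holding the full log could otherwise recompute the entire chain.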

Data provenance

We’re entering an era where most media online — images, videos, audio, and even sensor data — may be synthetic. As AI-generated content floods our feeds and devices, distinguishing real from fake is no longer a luxury — it’s a requirement for civic trust, scientific reproducibility, and platform integrity.

The Nexus Verifiable AI Lab will tackle this through verifiable data provenance. This means embedding cryptographic fingerprints, origin metadata, and proof-of-lineage into the data itself. Whether it’s a photojournalistic image or a medical training dataset, we’re developing the infrastructure to prove where data came from, how it was transformed, and by whom. Our aim is to build the technical foundation for a web where authenticity is natively verifiable — not retroactively inferred.
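The lineage idea can be illustrated with fingerprints and deterministic replay. In the Python sketch below, the `TRANSFORMS` registry, the step names, and the record fields are hypothetical. Each transformation step records SHA-256 fingerprints of its input and output plus the actor that ran it, and a verifier holding the original data replays the steps to confirm every fingerprint and the final result.

```python
import hashlib
from typing import Callable


def fingerprint(data: bytes) -> str:
    """SHA-256 fingerprint of a piece of data."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical registry of deterministic transformations a data pipeline might apply.
TRANSFORMS: dict[str, Callable[[bytes], bytes]] = {
    "strip": bytes.strip,
    "lowercase": bytes.lower,
    "dedupe_lines": lambda d: b"\n".join(dict.fromkeys(d.split(b"\n"))),
}


def record_lineage(source: bytes, steps: list[str], actor: str) -> tuple[bytes, list[dict]]:
    """Apply each step, recording input/output fingerprints and who ran it."""
    data, lineage = source, []
    for op in steps:
        out = TRANSFORMS[op](data)
        lineage.append({"op": op, "actor": actor,
                        "in": fingerprint(data), "out": fingerprint(out)})
        data = out
    return data, lineage


def verify_lineage(source: bytes, final: bytes, lineage: list[dict]) -> bool:
    """Replay the recorded steps from the source and check every fingerprint."""
    data = source
    for rec in lineage:
        if rec["op"] not in TRANSFORMS or rec["in"] != fingerprint(data):
            return False
        data = TRANSFORMS[rec["op"]](data)
        if rec["out"] != fingerprint(data):
            return False
    return fingerprint(data) == fingerprint(final)


raw = b"  Cat\nDog\nCat\n  "
final, lineage = record_lineage(raw, ["strip", "lowercase", "dedupe_lines"], actor="ingest-bot")
print(final)                                            # b'cat\ndog'
print(verify_lineage(raw, final, lineage))              # True
print(verify_lineage(raw, b"cat\ndog\nbird", lineage))  # False: claimed output differs
```

Replay requires the verifier to hold the source data and rerun every step, and the `actor` field here is not authenticated. Standards such as C2PA bind provenance claims with digital signatures, and verifiable computation could in principle replace the replay with a proof.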

AI-for-AI verification

As frontier AI models become more capable, they also become less interpretable. The cutting edge in model performance increasingly trades off against transparency, creating a critical need for systems that can independently evaluate these black boxes.

The Nexus Verifiable AI Lab will pioneer techniques in AI-for-AI verification, where smaller, interpretable, and trusted models are used to verify the behavior of more powerful, opaque ones. These verifiers can check for bias, hallucination, safety violations, or deviations from intended goals. Combined with cryptographic attestations, this approach forms a layered verification architecture: powerful systems are not left to self-regulate; they are continuously audited by verifiable peers.
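The shape of this architecture can be sketched with simple, fully inspectable rule-based checks standing in for a small trusted verifier model. In the Python sketch below, the function names and both checks are hypothetical illustrations. One flags quotations in an untrusted model’s answer that do not appear verbatim in the source it was given (a crude hallucination check), and the other flags policy-banned terms (a crude safety check). The answer passes only if every check passes.

```python
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    passed: bool
    issues: list[str]


def check_quotes_grounded(answer: str, source: str) -> list[str]:
    """Flag any double-quoted span in the answer that does not appear verbatim in the source."""
    return [f"unsupported quote: {q!r}" for q in re.findall(r'"([^"]+)"', answer)
            if q not in source]


def check_banned_terms(answer: str, banned: set[str]) -> list[str]:
    """Flag words that a (hypothetical) policy forbids."""
    words = set(re.findall(r"[a-z']+", answer.lower()))
    return [f"banned term: {w}" for w in sorted(words & banned)]


def verify_output(answer: str, source: str, banned: set[str]) -> Verdict:
    """Run every simple, inspectable check; the untrusted answer passes only if all do."""
    issues = check_quotes_grounded(answer, source) + check_banned_terms(answer, banned)
    return Verdict(passed=not issues, issues=issues)


source = "The zkVM output comes with a proof that it was computed correctly."
good = 'The post says the output "comes with a proof".'
bad = 'The post says the proof "is instant and free".'
print(verify_output(good, source, {"password"}).passed)  # True
print(verify_output(bad, source, {"password"}).issues)   # ["unsupported quote: 'is instant and free'"]
```

Real verifiers for bias or alignment are far harder to build than these string checks; the point of the sketch is the structure, in which the powerful system’s output is accepted only after independent, simpler checks pass.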

We believe that building verification into the foundation of AI development is one of the best ways to meet its complexity with clarity. Learn more about the new Nexus Verifiable AI Lab in our launch post or on the lab’s new web page.

Join us. Let’s make verifiability a pillar of the AI future.