We’re excited to announce the launch of the Nexus Verifiable AI Lab, a new research and development division of Nexus dedicated to exploring the frontier of verifiability, economics, and artificial intelligence in order to expand the boundaries of human and machine cooperation.

To meet one of the most urgent challenges of our time — managing and leveraging AI responsibly — we need more than ideas. We need action. And we need to start building.

The Nexus Verifiable AI Lab is where verifiable AI moves from theory into practice. It's where cryptographic proof becomes a foundational part of how AI models are built, deployed, and trusted. It's where safety and fairness are not just goals — they are properties that can be independently audited and cryptographically guaranteed.

The world cannot afford another generation of black-box systems. The future needs infrastructure built on a new kind of trust — one where verification is built in.

The Nexus Verifiable AI Lab will help us get there.

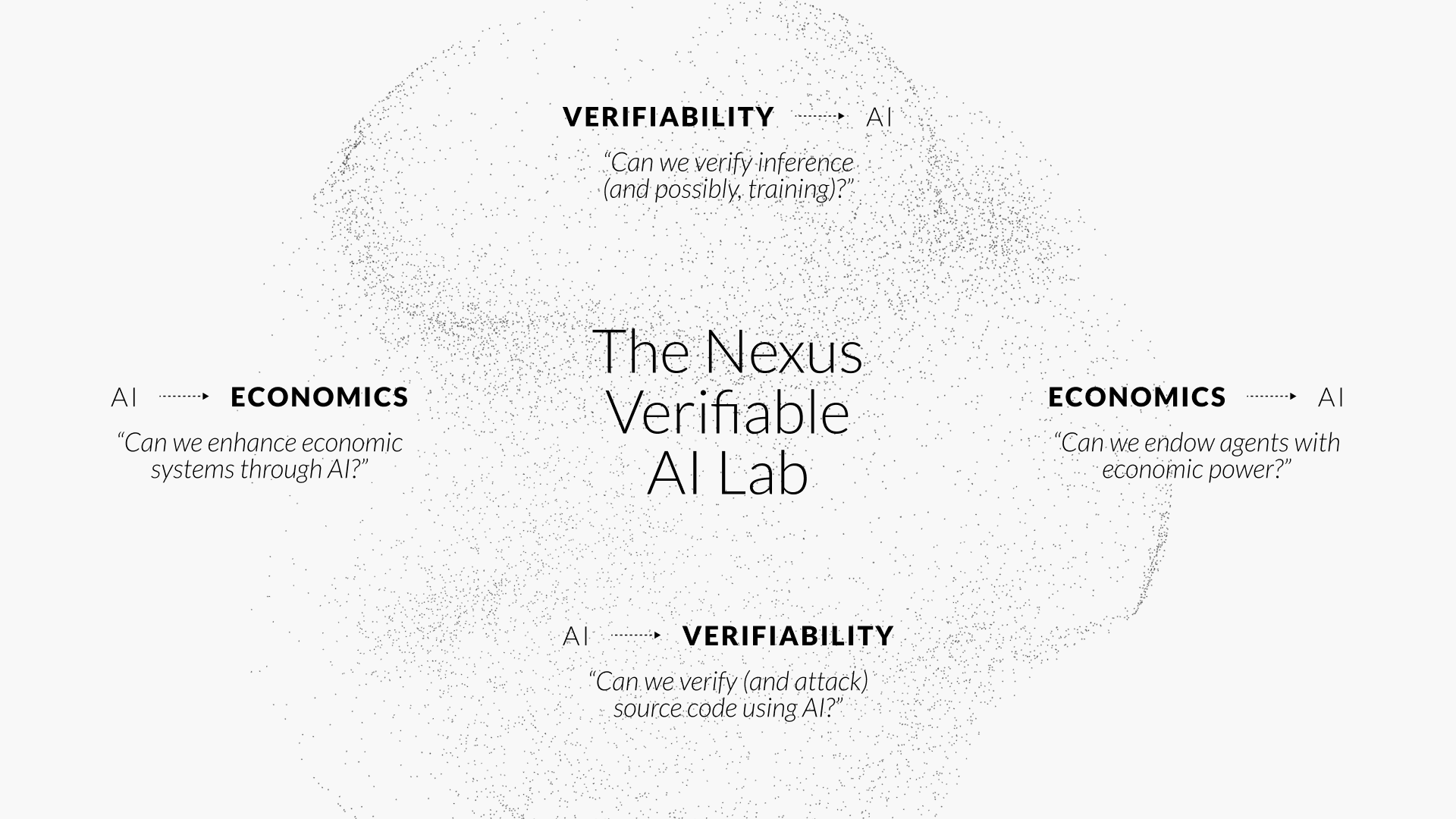

The Nexus Verifiable AI Lab will focus on four key questions that work as expanding feedback loops:

1. Verifiability → AI

Auditable AI, compliant by design.

Modern AI systems are powerful — but opaque. We rarely know exactly what data they were trained on, which version was deployed, or whether the output we see was actually generated by the model we tested. In regulated domains like healthcare, finance, and law, this opacity is a dealbreaker.

At the Nexus Verifiable AI Lab we will develop systems that make inference and training provable. Using techniques like zero-knowledge proofs, cryptographic attestations, and secure hardware, we aim to build AI pipelines that can be audited without exposing sensitive data — combining transparency, compliance, and privacy.

Imagine being able to cryptographically prove that a loan denial wasn’t biased, or that a medical diagnosis came from a model with certified training data. These aren’t theoretical goals — they’re emerging capabilities.
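To make "provable inference" a little more concrete, here is a minimal Python sketch of what an inference attestation might bind together: a digest of the deployed model weights, a digest of the input, a digest of the output, and a tag over all three. It is an illustration under stated assumptions, not the Lab's design. The names `InferenceAttestation`, `attest`, and `verify_attestation` are hypothetical. The shared-key HMAC tag stands in for the asymmetric signature that secure hardware would produce. A zero-knowledge proof would go further and prove the computation itself, rather than vouching for the binding.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest used as a compact commitment to some bytes."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class InferenceAttestation:
    model_digest: str   # commitment to the deployed weights (model version)
    input_digest: str   # commitment to the prompt or input record
    output_digest: str  # commitment to the generated output

    def canonical_bytes(self) -> bytes:
        # Deterministic serialization so signer and verifier hash the same bytes.
        return json.dumps(asdict(self), sort_keys=True).encode()


def _tag(key: bytes, att: InferenceAttestation) -> str:
    # HMAC-SHA256 is a stand-in (assumption) for a hardware-backed signature.
    return hmac.new(key, att.canonical_bytes(), hashlib.sha256).hexdigest()


def attest(key: bytes, weights: bytes, model_input: bytes, output: bytes):
    """Produced inside the (assumed) trusted environment that ran the model."""
    att = InferenceAttestation(
        sha256_hex(weights), sha256_hex(model_input), sha256_hex(output)
    )
    return att, _tag(key, att)


def verify_attestation(key, att, tag, expected_model_digest, model_input, output) -> bool:
    """Check the tag, then check that it covers this model, input, and output."""
    if not hmac.compare_digest(tag, _tag(key, att)):
        return False
    return (
        att.model_digest == expected_model_digest
        and att.input_digest == sha256_hex(model_input)
        and att.output_digest == sha256_hex(output)
    )


if __name__ == "__main__":
    key = b"demo-enclave-key"        # hypothetical key, for illustration only
    weights = b"certified-model-v1"  # placeholder for real weight bytes
    prompt, answer = b"loan application 1", b"approved"
    att, tag = attest(key, weights, prompt, answer)
    print(verify_attestation(key, att, tag, sha256_hex(weights), prompt, answer))  # True
    print(verify_attestation(key, att, tag, sha256_hex(b"untested-model-v2"), prompt, answer))  # False
    print(verify_attestation(key, att, tag, sha256_hex(weights), prompt, b"denied"))  # False
```

A verifier who knows the digest of the certified model can reject an output that came from a different model version or was altered after the fact. That is the "was this the model we tested?" question in its simplest form. Hiding the input and weights from the verifier entirely is where zero-knowledge techniques would come in.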

2. AI → Verifiability

Machine intelligence as the ultimate auditor.

As software systems grow more complex — from smart contracts to decentralized applications — verifying their correctness becomes harder and more expensive. What if AI could help?

We’re exploring how large language models and agentic AI systems can assist in formal verification: identifying vulnerabilities, proving smart contract safety, or even verifying the logical consistency of other AI models. In red-team/blue-team simulations, attacker AIs attempt to break protocols while defender AIs patch them — a dynamic, reinforcement-learning-powered environment for hardening digital infrastructure.

In a world where exploits move fast, verification needs to move faster.

3. Economics → AI

From passive assistants to economic actors.

Why can’t Siri buy you coffee? Why can’t your AI negotiate a contractor rate, subscribe to a service, or rebalance your portfolio?

The answer isn’t just technical — it comes back to infrastructure. Today’s economic systems are not designed for autonomous agents. The Nexus Verifiable AI Lab is researching how AI agents can safely participate in the economy: initiating payments, signing contracts, managing wallets, and complying with real-world protocols like KYC and AML — all while remaining provably aligned with user intent.

We see a future where your AI doesn’t just answer questions — it handles logistics, closes deals, and transacts on your behalf. The missing link is verifiability.
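One way to picture an agent that transacts while staying within user intent is sketched below in Python. It assumes a hypothetical spending mandate the user has approved, made up of a per-payment cap, a daily cap, and an allowlist of payees. The agent's wallet refuses any payment outside that mandate. Each accepted payment is appended to a hash-chained log that the user can audit afterwards. All names (`Mandate`, `AgentWallet`, `audit_log`) are illustrative assumptions, not a Nexus API. KYC/AML checks, key management, and daily resets are left out.

```python
import hashlib
from dataclasses import dataclass, field
from decimal import Decimal

GENESIS = "0" * 64  # hash that the first log entry chains from


@dataclass(frozen=True)
class Mandate:
    """Spending limits the user has approved (hypothetical structure)."""
    per_payment_cap: Decimal
    daily_cap: Decimal
    allowed_payees: frozenset


def entry_hash(prev_hash: str, payee: str, amount: Decimal) -> str:
    # Each entry commits to the previous one, so editing history breaks the chain.
    return hashlib.sha256(f"{prev_hash}|{payee}|{amount}".encode()).hexdigest()


def within_mandate(m: Mandate, payee: str, amount: Decimal, spent: Decimal) -> bool:
    return (
        amount > 0
        and payee in m.allowed_payees
        and amount <= m.per_payment_cap
        and spent + amount <= m.daily_cap
    )


@dataclass
class AgentWallet:
    mandate: Mandate
    spent_today: Decimal = Decimal("0")
    log: list = field(default_factory=list)  # entries: (payee, amount, hash)

    def pay(self, payee: str, amount: Decimal) -> bool:
        """Execute a payment only if it stays inside the mandate."""
        if not within_mandate(self.mandate, payee, amount, self.spent_today):
            return False
        prev = self.log[-1][2] if self.log else GENESIS
        self.log.append((payee, amount, entry_hash(prev, payee, amount)))
        self.spent_today += amount
        return True


def audit_log(log: list, mandate: Mandate) -> bool:
    """Re-derive the hash chain and re-check every payment (log assumed to cover one day)."""
    prev, spent = GENESIS, Decimal("0")
    for payee, amount, h in log:
        if h != entry_hash(prev, payee, amount):
            return False
        if not within_mandate(mandate, payee, amount, spent):
            return False
        prev, spent = h, spent + amount
    return True


if __name__ == "__main__":
    mandate = Mandate(Decimal("10"), Decimal("25"), frozenset({"coffee-shop", "saas-vendor"}))
    wallet = AgentWallet(mandate)
    print(wallet.pay("coffee-shop", Decimal("4.50")))    # True
    print(wallet.pay("unknown-payee", Decimal("1.00")))  # False: not on the allowlist
    print(wallet.pay("saas-vendor", Decimal("12.00")))   # False: over the per-payment cap
    print(wallet.pay("saas-vendor", Decimal("9.99")))    # True
    print(audit_log(wallet.log, mandate))                # True
    payee, _, h = wallet.log[0]
    wallet.log[0] = (payee, Decimal("1.00"), h)          # tamper with history
    print(audit_log(wallet.log, mandate))                # False
```

The hash chain makes tampering detectable only if its latest hash is anchored somewhere the agent cannot rewrite, for example by publishing it onchain. That anchoring step is assumed here, not shown.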

4. AI → Economics

What happens when AI gets a seat at the policy table?

If markets are systems, could intelligent agents help manage them better? Could autonomous models regulate supply chains, balance incentives, or even govern monetary policy?

These aren’t just hypotheticals. At Nexus, we’ll experiment with the Nexus Economic Machine — a living laboratory where AI agents interact with programmable currencies and onchain economic protocols. It's a testbed for what it would mean to embed intelligence directly into the financial fabric of the internet.

We’re exploring what that world could look like, and how to make it verifiable, auditable, and human-aligned.

AI is no longer confined to theoretical use cases. It’s making decisions in public, managing capital, shaping narratives, and mediating trust. As these systems scale in power and presence, the need for verifiable intelligence becomes existential.

The Nexus Verifiable AI Lab is our commitment to meeting that need — not with slogans, but with systems. We’re building the infrastructure for an AI-powered future that doesn’t just ask for trust — it proves it. From cryptographic guarantees to autonomous agents, from red-teaming reason to regulating economies, this is where the hard problems live. And this is where we intend to solve them.

We build in public and we build with the best. Our current partners building with AI include: Pi Squared, Nethermind, Lilypad, Rena Labs, Hyperbolic, IO.net, Gaib, Hetu, Xtrace, and Public AI.

If you’re a builder, a researcher, or a visionary asking the same questions, we’d love to hear from you.

Learn more at nexus.xyz/ai-lab.